Setting up Consul and Nomad in Hetzner Cloud

I've been playing around with setting up infrastructure for my own projects (spoiler: I haven't decided what to use yet). Things have changed since I last blogged. For one, I've burned through all my AWS credits, so I'm going back in time and using simple virtual machines for my pet projects.

I was using Hetzner Cloud long before I became an AWS Certified Solutions Architect, and now I feel the need to understand more deeply how things work. I've also made public (thanks to a friend who asked why I was hiding it) the tool I used to deploy web services long before I adopted GitHub Actions for CI/CD. You can find it here: https://github.com/abarbarov/nabu (keep in mind that all the IP addresses, projects and private keys in it are obsolete, in case you want to hack around).

Now let me stop talking and start scripting.

You will need an API token to use Hetzner Cloud with Terraform. If you don't have one yet, create it in the Hetzner Cloud Console with Read & Write permissions, since Terraform needs to create resources.

Create a new folder for all your scripts and start with an all-in-one main.tf. You can split it into several files if you like; I keep everything in one file only to make this post easier to write:

```hcl
terraform {
  required_providers {
    hcloud = {
      source  = "hetznercloud/hcloud"
      version = "1.42.1"
    }
  }
}

provider "hcloud" {
  token = var.hetzner_token
}

variable "project" {
  type        = string
  description = "Project name"
}

variable "hetzner_token" {
  type        = string
  description = "Hetzner API Token"
  sensitive   = true // keep the token out of plan output
}

variable "private_network_zone" {
  type        = string
  description = "Private network zone"
  default     = "eu-central"
}

variable "private_network_cidr" {
  type        = string
  description = "CIDR of the private network"
  default     = "10.0.0.0/16"
}

variable "nodes" {
  type = map(object({
    private_ip = string
    location   = string
    type       = string
  }))
  description = "Nodes setup"
  default = {
    0 = {
      private_ip = "10.0.0.3"
      location   = "fsn1"
      type       = "server"
    }
    1 = {
      private_ip = "10.0.0.4"
      location   = "fsn1"
      type       = "server"
    }
    2 = {
      private_ip = "10.0.0.5"
      location   = "fsn1"
      type       = "server"
    }
    // 3 = {
    //   private_ip = "10.0.0.6"
    //   location   = "fsn1"
    //   type       = "client"
    // }
  }
}

variable "enable_nomad_acls" {
  type        = bool
  description = "Bootstrap Nomad with ACLs"
  default     = true
}

variable "load_balancer" {
  type = object({
    type       = string
    private_ip = string
  })
  description = "Load balancer settings"
  default = {
    type       = "lb11"
    private_ip = "10.0.0.2"
  }
}

variable "generate_ssh_key_file" {
  type        = bool
  description = "Defines whether the generated ssh key should be stored as local file."
  default     = false
}

variable "consul_version" {
  type        = string
  description = "Consul version to install"
  default     = "1.16.0-1"
}

variable "nomad_version" {
  type        = string
  description = "Nomad version to install"
  default     = "1.6.0-1"
}

locals {
  // Private IPs of the server nodes, used for retry_join and bootstrap_expect
  server_ips = [for node in var.nodes : node.private_ip if node.type == "server"]
}

// SSH

resource "local_file" "private_key" {
  count           = var.generate_ssh_key_file ? 1 : 0
  content         = tls_private_key.machines.private_key_openssh
  filename        = "${path.root}/machines.pem"
  file_permission = "0600"
}

resource "tls_private_key" "machines" {
  algorithm = "ED25519"
  rsa_bits  = 4096 // only used when algorithm = "RSA"; ignored for ED25519
}

resource "hcloud_ssh_key" "ssh_key" {
  name       = "${var.project}-ssh-key"
  public_key = tls_private_key.machines.public_key_openssh
}

// NETWORK

resource "hcloud_network" "private_network" {
  name     = "${var.project}-network"
  ip_range = var.private_network_cidr
}

resource "hcloud_network_subnet" "network" {
  network_id   = hcloud_network.private_network.id
  type         = "cloud"
  network_zone = var.private_network_zone
  ip_range     = var.private_network_cidr
}

// SERVERS

resource "hcloud_server" "nodes" {
  depends_on = [hcloud_network_subnet.network]
  for_each   = var.nodes

  name        = "${var.project}-${each.value.type}-${each.key}"
  image       = "ubuntu-22.04"
  server_type = "cax11" // Arm64 (Ampere) instance
  location    = each.value.location
  ssh_keys    = [hcloud_ssh_key.ssh_key.id]

  labels = {
    "${var.project}-${each.value.type}-node" = "any"
  }

  network {
    network_id = hcloud_network.private_network.id
    ip         = each.value.private_ip
  }

  public_net {
    ipv6_enabled = true
    ipv4_enabled = true
  }

  // Installs Consul and Nomad via cloud-init on first boot
  user_data = templatefile("${path.module}/scripts/common_setup.sh", {
    CONSUL_VERSION = var.consul_version
    NOMAD_VERSION  = var.nomad_version
  })

  // Block until cloud-init has finished before the role-specific setup runs
  provisioner "remote-exec" {
    inline = [
      "echo 'Waiting for cloud-init to complete...'",
      "cloud-init status --wait > /dev/null",
      "echo 'Completed cloud-init!'"
    ]

    connection {
      type        = "ssh"
      host        = self.ipv6_address // requires IPv6 on the machine running Terraform
      user        = "root"
      private_key = tls_private_key.machines.private_key_openssh
    }
  }
}

resource "hcloud_server_network" "server_network" {
  for_each = var.nodes

  network_id = hcloud_network.private_network.id
  server_id  = hcloud_server.nodes[each.key].id
  ip         = each.value.private_ip
}

resource "null_resource" "node_setup" {
  for_each = var.nodes

  // Re-run the setup whenever the server is replaced
  triggers = {
    "vm" = hcloud_server.nodes[each.key].id
  }

  connection {
    type        = "ssh"
    user        = "root"
    private_key = tls_private_key.machines.private_key_openssh
    host        = hcloud_server.nodes[each.key].ipv6_address
  }

  // Render scripts/server_setup.sh or scripts/client_setup.sh for this node
  provisioner "file" {
    content = templatefile("${path.module}/scripts/${each.value.type}_setup.sh", {
      ENABLE_NOMAD_ACLs = var.enable_nomad_acls
      SERVER_COUNT      = length(local.server_ips)
      IP_RANGE          = var.private_network_cidr
      SERVER_IPs        = jsonencode(local.server_ips)
    })
    destination = "setup.sh"
  }

  provisioner "remote-exec" {
    inline = [
      "chmod +x setup.sh",
      "./setup.sh"
    ]
  }
}

// LOAD BALANCER

resource "hcloud_load_balancer" "load_balancer" {
  name               = "${var.project}-load-balancer"
  load_balancer_type = var.load_balancer.type
  location           = "fsn1"
}

resource "hcloud_load_balancer_target" "load_balancer_target" {
  // Targets reached via private IP need the load balancer attached to the network first
  depends_on = [hcloud_load_balancer_network.server_network_lb]
  for_each   = var.nodes

  type             = "server"
  load_balancer_id = hcloud_load_balancer.load_balancer.id
  server_id        = hcloud_server.nodes[each.key].id
  use_private_ip   = true
}

resource "hcloud_load_balancer_network" "server_network_lb" {
  load_balancer_id = hcloud_load_balancer.load_balancer.id
  network_id       = hcloud_network.private_network.id
  ip               = var.load_balancer.private_ip
}

// Public port 80 forwards to the Nomad HTTP API/UI on 4646
resource "hcloud_load_balancer_service" "load_balancer_service" {
  load_balancer_id = hcloud_load_balancer.load_balancer.id
  protocol         = "http"
  listen_port      = 80
  destination_port = 4646

  http {
    sticky_sessions = true
  }

  health_check {
    protocol = "http"
    port     = 4646
    interval = 10
    timeout  = 5
    retries  = 3

    http {
      path = "/v1/status/leader"
      status_codes = [
        "2??",
        "3??",
      ]
    }
  }
}

output "server_nodes" {
  value = {
    for k, node in hcloud_server.nodes : k => {
      name         = node.name
      ipv4_address = node.ipv4_address
      ipv6_address = node.ipv6_address
      internal_ip  = var.nodes[k].private_ip
    }
  }
  description = "Server nodes details"
}

output "load_balancer" {
  value       = hcloud_load_balancer.load_balancer.ipv4
  description = "Load balancer IP address"
}
```
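The load balancer service above probes Nomad's /v1/status/leader endpoint on port 4646 every 10 seconds and treats a target as healthy when the HTTP status matches 2?? or 3??. As far as I know that status endpoint needs no ACL token, which would explain why the check passes with ACLs enabled. Here is a rough bash sketch that reproduces the check from your own machine, assuming each ? in a pattern stands for exactly one character; status_matches and wait_for_nomad are hypothetical helpers, not part of the setup:

```shell
#!/usr/bin/env bash

# Succeed if HTTP status code $1 matches any of the wildcard patterns that
# follow it, e.g. status_matches 200 "2??" "3??".
status_matches() {
  local code="$1" pattern
  shift
  for pattern in "$@"; do
    # $pattern is left unquoted on purpose so "?" acts as a one-character glob.
    case "$code" in
      $pattern) return 0 ;;
    esac
  done
  return 1
}

# Hypothetical helper: poll the leader endpoint through the load balancer
# (port 80 forwards to Nomad's 4646) until it passes the same status check,
# or give up after the given number of attempts (default 30, 10 s apart).
wait_for_nomad() {
  local lb_ip="$1" attempts="${2:-30}" code i
  for ((i = 1; i <= attempts; i++)); do
    # curl prints 000 as the code when the connection fails.
    code=$(curl -s -o /dev/null -w '%{http_code}' "http://${lb_ip}/v1/status/leader")
    if status_matches "$code" "2??" "3??"; then
      echo "Nomad is answering (HTTP $code)"
      return 0
    fi
    sleep 10
  done
  echo "Nomad did not become healthy after $attempts attempts" >&2
  return 1
}
```

After terraform apply you could run, for example, wait_for_nomad 167.235.216.87 with the IP from the load_balancer output, before opening the UI.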

Note that the client node (key 3) is commented out. We'll add it once the Consul and Nomad server nodes are created and configured. Also note that both IPv4 and IPv6 addresses are enabled: I tried to use IPv6 only but haven't succeeded (yet).

Next, create a file with the secrets and variable values. Terraform automatically loads files ending in .auto.tfvars, so create dev.auto.tfvars with the following content:

```hcl
hetzner_token         = "<TOKEN>"
generate_ssh_key_file = true
project               = "project-v1"
```
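Both this file and the generated key are secrets. A minimal sketch, assuming you keep the folder in git: Terraform reads any input variable from an environment variable named TF_VAR_ followed by the variable name, so the token can live in your shell instead of the file, and a .gitignore keeps state and keys out of the repository (the state stores the generated private key unencrypted):

```shell
#!/usr/bin/env bash

# Terraform maps TF_VAR_<name> to the input variable <name>.
export TF_VAR_hetzner_token="<TOKEN>"   # placeholder: use your real token

# Keep local secrets out of version control. The Terraform state holds the
# generated private key in plain text, and machines.pem is that same key.
cat > .gitignore <<'EOF'
.terraform/
*.tfstate
*.tfstate.*
*.auto.tfvars
machines.pem
EOF
```

If you go the environment-variable route, remove the hetzner_token line from dev.auto.tfvars, because values from *.auto.tfvars files take precedence over TF_VAR_ variables. If you already have a .gitignore, append with >> instead of overwriting it.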

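Well, almost: a few more files are needed. With them in place, you surprisingly won't spend hours figuring out why the load balancer shows an unhealthy status. You may have noticed that main.tf runs scripts/common_setup.sh as cloud-init user_data on every machine and then uses a null_resource to upload and run scripts/server_setup.sh or scripts/client_setup.sh, depending on the node type. Create a scripts folder next to main.tf for them. Here they are, starting with scripts/common_setup.sh: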

```shell
#!/bin/bash

export DEBIAN_FRONTEND=noninteractive

# Add the HashiCorp apt repository
curl -fsSL https://apt.releases.hashicorp.com/gpg | apt-key add -
apt-add-repository "deb [arch=$(dpkg --print-architecture)] https://apt.releases.hashicorp.com $(lsb_release -cs) main"

apt-get update
apt-get upgrade -y
apt-get install jq -y

# The pinned versions are filled in by Terraform's templatefile()
apt-get install -y consul=${CONSUL_VERSION}
apt-get install -y nomad=${NOMAD_VERSION}

chown -R consul:consul /etc/consul.d
chmod -R 640 /etc/consul.d/*
chown -R nomad:nomad /etc/nomad.d
chmod -R 640 /etc/nomad.d/*

# Base Nomad configuration shared by servers and clients
cat <<EOF > /etc/nomad.d/nomad.hcl
datacenter = "dc1"
data_dir = "/opt/nomad"
EOF
```

Next comes scripts/server_setup.sh, which configures Consul and Nomad in server mode:

```shell
#!/bin/bash

# Consul agent configuration for the servers.
# SERVER_IPs, IP_RANGE and SERVER_COUNT are rendered by Terraform's templatefile().
cat <<EOF > /etc/consul.d/consul.hcl
datacenter = "dc1"
data_dir = "/opt/consul"

connect {
  enabled = true
}

client_addr = "0.0.0.0"

ui_config {
  enabled = true
}

retry_join = ${SERVER_IPs}
bind_addr = "{{ GetPrivateInterfaces | include \"network\" \"${IP_RANGE}\" | attr \"address\" }}"

acl = {
  enabled = true
  default_policy = "allow"
  down_policy = "extend-cache"
}

performance {
  raft_multiplier = 1
}
EOF

consul validate /etc/consul.d/consul.hcl

# Run Consul in server mode and wait for all servers before electing a leader
cat <<EOF > /etc/consul.d/server.hcl
server = true
bootstrap_expect = ${SERVER_COUNT}
EOF

# Run Nomad in server mode, with ACLs toggled by the enable_nomad_acls variable
cat <<EOF > /etc/nomad.d/server.hcl
server {
  enabled = true
  bootstrap_expect = ${SERVER_COUNT}
}

acl {
  %{ if ENABLE_NOMAD_ACLs }enabled = true%{ else }enabled = false%{ endif }
}
EOF

systemctl enable consul
systemctl enable nomad
systemctl start consul
systemctl start nomad
```
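The bind_addr line relies on a go-sockaddr template: GetPrivateInterfaces lists the host's private interfaces, include "network" keeps those inside IP_RANGE, and attr "address" returns the matching address (the client config below uses attr "name" to get the interface name instead). To make the filter concrete, here is a rough bash equivalent of the IPv4 CIDR match. It is only a sketch: ip_to_int, ip_in_cidr and private_addr are hypothetical helpers, and private_addr simply scans hostname -I rather than reproducing go-sockaddr's interface logic.

```shell
#!/usr/bin/env bash

# Convert a dotted-quad IPv4 address (e.g. 10.0.0.3) to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if IPv4 address $1 lies inside CIDR block $2 (e.g. 10.0.0.0/16).
ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}" mask
  # Bash arithmetic is 64-bit, so trim the shifted mask back to 32 bits.
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Print the first IPv4 address of this host inside the given range, roughly
# what the bind_addr template resolves to (IPv6 addresses are skipped).
private_addr() {
  local range="$1" addr
  for addr in $(hostname -I); do
    if [[ "$addr" != *:* ]] && ip_in_cidr "$addr" "$range"; then
      echo "$addr"
      return 0
    fi
  done
  return 1
}
```

On a server node, private_addr 10.0.0.0/16 should print its 10.0.0.x address, the same one consul members reports for that node, while the public 157.90.x.x style addresses are filtered out.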

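And finally scripts/client_setup.sh, which configures Consul and Nomad in client mode and installs the CNI plugins and Docker Engine: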

```shell
#!/bin/bash

# Consul agent configuration for client nodes (no server block)
cat <<EOF > /etc/consul.d/consul.hcl
datacenter = "dc1"
data_dir = "/opt/consul"

connect {
  enabled = true
}

retry_join = ${SERVER_IPs}
bind_addr = "{{ GetPrivateInterfaces | include \"network\" \"${IP_RANGE}\" | attr \"address\" }}"
check_update_interval = "0s"

acl = {
  enabled = true
  default_policy = "allow"
  down_policy = "extend-cache"
}

performance {
  raft_multiplier = 1
}
EOF

# Nomad client: fingerprint networking on the private interface
cat <<EOF > /etc/nomad.d/client.hcl
client {
  enabled = true
  network_interface = "{{ GetPrivateInterfaces | include \"network\" \"${IP_RANGE}\" | attr \"name\" }}"
}

acl {
  %{ if ENABLE_NOMAD_ACLs }enabled = true%{ else }enabled = false%{ endif }
}
EOF

# Install CNI plugins (needed for Nomad bridge networking and Consul Connect)
CNI_VERSION="v1.3.0"
CNI_ARCH=$( [ "$(uname -m)" = aarch64 ] && echo arm64 || echo amd64 )
curl -fL -o cni-plugins.tgz "https://github.com/containernetworking/plugins/releases/download/$CNI_VERSION/cni-plugins-linux-$CNI_ARCH-$CNI_VERSION.tgz"
mkdir -p /opt/cni/bin
tar -C /opt/cni/bin -xzf cni-plugins.tgz

# Let bridged container traffic pass through iptables
cat <<EOF > /etc/sysctl.d/10-consul.conf
net.bridge.bridge-nf-call-arptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

# Install Docker Engine (the script already runs as root, so sudo is not needed)
export DEBIAN_FRONTEND=noninteractive
apt-get remove docker docker-engine docker.io containerd runc -y
apt-get install ca-certificates curl gnupg lsb-release -y
mkdir -p /etc/apt/keyrings
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | gpg --dearmor -o /etc/apt/keyrings/docker.gpg
echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" > /etc/apt/sources.list.d/docker.list
apt-get update
apt-get install docker-ce docker-ce-cli containerd.io docker-compose-plugin -y

systemctl enable consul
systemctl enable nomad
systemctl start consul
systemctl start nomad
```
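The CNI download picks the release archive by CPU architecture. The cax11 servers used here are Arm64 machines, so uname -m reports aarch64 and the arm64 archive is fetched. Below is a slightly stricter sketch of the same logic; cni_arch and cni_url are hypothetical helpers that fail on an unknown architecture instead of silently falling back to amd64:

```shell
#!/usr/bin/env bash

# Map the kernel's machine name to the architecture suffix used in the
# containernetworking/plugins release file names.
cni_arch() {
  case "$1" in
    aarch64 | arm64) echo arm64 ;;
    x86_64 | amd64) echo amd64 ;;
    *) echo "unsupported architecture: $1" >&2; return 1 ;;
  esac
}

# Build the download URL for a CNI plugins version; the machine name
# defaults to this host's uname -m.
cni_url() {
  local version="$1" arch
  arch=$(cni_arch "${2:-$(uname -m)}") || return 1
  echo "https://github.com/containernetworking/plugins/releases/download/${version}/cni-plugins-linux-${arch}-${version}.tgz"
}
```

If you paste these helpers into client_setup.sh itself, escape each ${...} as $${...}: the file is rendered by Terraform's templatefile(), which would otherwise try to interpolate ${version} and ${arch} as template variables.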

Now run terraform init, then terraform apply. The plan should look like this:

```text
❯ terraform apply

Terraform used the selected providers to generate the following execution plan. Resource
actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # hcloud_load_balancer.load_balancer will be created
  + resource "hcloud_load_balancer" "load_balancer" {
      + delete_protection  = false
      + id                 = (known after apply)
      + ipv4               = (known after apply)
      + ipv6               = (known after apply)
      + labels             = (known after apply)
      + load_balancer_type = "lb11"
      + location           = "fsn1"
      + name               = "project-v1-load-balancer"
      + network_id         = (known after apply)
      + network_ip         = (known after apply)
      + network_zone       = (known after apply)
    }

  # hcloud_load_balancer_network.server_network_lb will be created
  + resource "hcloud_load_balancer_network" "server_network_lb" {
      + enable_public_interface = true
      + id                      = (known after apply)
      + ip                      = "10.0.0.2"
      + load_balancer_id        = (known after apply)
      + network_id              = (known after apply)
    }

  # hcloud_load_balancer_service.load_balancer_service will be created
  + resource "hcloud_load_balancer_service" "load_balancer_service" {
      + destination_port = 4646
      + id               = (known after apply)
      + listen_port      = 80
      + load_balancer_id = (known after apply)
      + protocol         = "http"
      + proxyprotocol    = (known after apply)

      + health_check {
          + interval = 10
          + port     = 4646
          + protocol = "http"
          + retries  = 3
          + timeout  = 5

          + http {
              + path         = "/v1/status/leader"
              + status_codes = [
                  + "2??",
                  + "3??",
                ]
            }
        }

      + http {
          + certificates    = (known after apply)
          + cookie_lifetime = (known after apply)
          + cookie_name     = (known after apply)
          + redirect_http   = (known after apply)
          + sticky_sessions = true
        }
    }

  # hcloud_load_balancer_target.load_balancer_target["0"] will be created
  + resource "hcloud_load_balancer_target" "load_balancer_target" {
      + id               = (known after apply)
      + load_balancer_id = (known after apply)
      + server_id        = (known after apply)
      + type             = "server"
      + use_private_ip   = true
    }

  # hcloud_load_balancer_target.load_balancer_target["1"] will be created
  + resource "hcloud_load_balancer_target" "load_balancer_target" {
      + id               = (known after apply)
      + load_balancer_id = (known after apply)
      + server_id        = (known after apply)
      + type             = "server"
      + use_private_ip   = true
    }

  # hcloud_load_balancer_target.load_balancer_target["2"] will be created
  + resource "hcloud_load_balancer_target" "load_balancer_target" {
      + id               = (known after apply)
      + load_balancer_id = (known after apply)
      + server_id        = (known after apply)
      + type             = "server"
      + use_private_ip   = true
    }

  # hcloud_network.private_network will be created
  + resource "hcloud_network" "private_network" {
      + delete_protection        = false
      + expose_routes_to_vswitch = false
      + id                       = (known after apply)
      + ip_range                 = "10.0.0.0/16"
      + name                     = "project-v1-network"
    }

  # hcloud_network_subnet.network will be created
  + resource "hcloud_network_subnet" "network" {
      + gateway      = (known after apply)
      + id           = (known after apply)
      + ip_range     = "10.0.0.0/16"
      + network_id   = (known after apply)
      + network_zone = "eu-central"
      + type         = "cloud"
    }

  # hcloud_server.nodes["0"] will be created
  + resource "hcloud_server" "nodes" {
      + allow_deprecated_images    = false
      + backup_window              = (known after apply)
      + backups                    = false
      + datacenter                 = (known after apply)
      + delete_protection          = false
      + firewall_ids               = (known after apply)
      + id                         = (known after apply)
      + ignore_remote_firewall_ids = false
      + image                      = "ubuntu-22.04"
      + ipv4_address               = (known after apply)
      + ipv6_address               = (known after apply)
      + ipv6_network               = (known after apply)
      + keep_disk                  = false
      + labels                     = {
          + "project-v1-server-node" = "any"
        }
      + location                   = "fsn1"
      + name                       = "project-v1-server-0"
      + rebuild_protection         = false
      + server_type                = "cax11"
      + ssh_keys                   = (known after apply)
      + status                     = (known after apply)
      + user_data                  = "SqZbbDt2vm3CPq7vgujpjTiEYrA="

      + network {
          + alias_ips   = (known after apply)
          + ip          = "10.0.0.3"
          + mac_address = (known after apply)
          + network_id  = (known after apply)
        }

      + public_net {
          + ipv4         = (known after apply)
          + ipv4_enabled = true
          + ipv6         = (known after apply)
          + ipv6_enabled = true
        }
    }

  # hcloud_server.nodes["1"] will be created
  + resource "hcloud_server" "nodes" {
      + allow_deprecated_images    = false
      + backup_window              = (known after apply)
      + backups                    = false
      + datacenter                 = (known after apply)
      + delete_protection          = false
      + firewall_ids               = (known after apply)
      + id                         = (known after apply)
      + ignore_remote_firewall_ids = false
      + image                      = "ubuntu-22.04"
      + ipv4_address               = (known after apply)
      + ipv6_address               = (known after apply)
      + ipv6_network               = (known after apply)
      + keep_disk                  = false
      + labels                     = {
          + "project-v1-server-node" = "any"
        }
      + location                   = "fsn1"
      + name                       = "project-v1-server-1"
      + rebuild_protection         = false
      + server_type                = "cax11"
      + ssh_keys                   = (known after apply)
      + status                     = (known after apply)
      + user_data                  = "SqZbbDt2vm3CPq7vgujpjTiEYrA="

      + network {
          + alias_ips   = (known after apply)
          + ip          = "10.0.0.4"
          + mac_address = (known after apply)
          + network_id  = (known after apply)
        }

      + public_net {
          + ipv4         = (known after apply)
          + ipv4_enabled = true
          + ipv6         = (known after apply)
          + ipv6_enabled = true
        }
    }

  # hcloud_server.nodes["2"] will be created
  + resource "hcloud_server" "nodes" {
      + allow_deprecated_images    = false
      + backup_window              = (known after apply)
      + backups                    = false
      + datacenter                 = (known after apply)
      + delete_protection          = false
      + firewall_ids               = (known after apply)
      + id                         = (known after apply)
      + ignore_remote_firewall_ids = false
      + image                      = "ubuntu-22.04"
      + ipv4_address               = (known after apply)
      + ipv6_address               = (known after apply)
      + ipv6_network               = (known after apply)
      + keep_disk                  = false
      + labels                     = {
          + "project-v1-server-node" = "any"
        }
      + location                   = "fsn1"
      + name                       = "project-v1-server-2"
      + rebuild_protection         = false
      + server_type                = "cax11"
      + ssh_keys                   = (known after apply)
      + status                     = (known after apply)
      + user_data                  = "SqZbbDt2vm3CPq7vgujpjTiEYrA="

      + network {
          + alias_ips   = (known after apply)
          + ip          = "10.0.0.5"
          + mac_address = (known after apply)
          + network_id  = (known after apply)
        }

      + public_net {
          + ipv4         = (known after apply)
          + ipv4_enabled = true
          + ipv6         = (known after apply)
          + ipv6_enabled = true
        }
    }

  # hcloud_server_network.server_network["0"] will be created
  + resource "hcloud_server_network" "server_network" {
      + id          = (known after apply)
      + ip          = "10.0.0.3"
      + mac_address = (known after apply)
      + network_id  = (known after apply)
      + server_id   = (known after apply)
    }

  # hcloud_server_network.server_network["1"] will be created
  + resource "hcloud_server_network" "server_network" {
      + id          = (known after apply)
      + ip          = "10.0.0.4"
      + mac_address = (known after apply)
      + network_id  = (known after apply)
      + server_id   = (known after apply)
    }

  # hcloud_server_network.server_network["2"] will be created
  + resource "hcloud_server_network" "server_network" {
      + id          = (known after apply)
      + ip          = "10.0.0.5"
      + mac_address = (known after apply)
      + network_id  = (known after apply)
      + server_id   = (known after apply)
    }

  # hcloud_ssh_key.ssh_key will be created
  + resource "hcloud_ssh_key" "ssh_key" {
      + fingerprint = (known after apply)
      + id          = (known after apply)
      + name        = "project-v1-ssh-key"
      + public_key  = (known after apply)
    }

  # local_file.private_key[0] will be created
  + resource "local_file" "private_key" {
      + content              = (sensitive value)
      + content_base64sha256 = (known after apply)
      + content_base64sha512 = (known after apply)
      + content_md5          = (known after apply)
      + content_sha1         = (known after apply)
      + content_sha256       = (known after apply)
      + content_sha512       = (known after apply)
      + directory_permission = "0777"
      + file_permission      = "0600"
      + filename             = "./machines.pem"
      + id                   = (known after apply)
    }

  # null_resource.node_setup["0"] will be created
  + resource "null_resource" "node_setup" {
      + id       = (known after apply)
      + triggers = {
          + "vm" = (known after apply)
        }
    }

  # null_resource.node_setup["1"] will be created
  + resource "null_resource" "node_setup" {
      + id       = (known after apply)
      + triggers = {
          + "vm" = (known after apply)
        }
    }

  # null_resource.node_setup["2"] will be created
  + resource "null_resource" "node_setup" {
      + id       = (known after apply)
      + triggers = {
          + "vm" = (known after apply)
        }
    }

  # tls_private_key.machines will be created
  + resource "tls_private_key" "machines" {
      + algorithm                     = "ED25519"
      + ecdsa_curve                   = "P224"
      + id                            = (known after apply)
      + private_key_openssh           = (sensitive value)
      + private_key_pem               = (sensitive value)
      + private_key_pem_pkcs8         = (sensitive value)
      + public_key_fingerprint_md5    = (known after apply)
      + public_key_fingerprint_sha256 = (known after apply)
      + public_key_openssh            = (known after apply)
      + public_key_pem                = (known after apply)
      + rsa_bits                      = 4096
    }

Plan: 20 to add, 0 to change, 0 to destroy.

Changes to Outputs:
  + load_balancer = (known after apply)
  + server_nodes  = {
      + "0" = {
          + internal_ip  = "10.0.0.3"
          + ipv4_address = (known after apply)
          + ipv6_address = (known after apply)
          + name         = "project-v1-server-0"
        }
      + "1" = {
          + internal_ip  = "10.0.0.4"
          + ipv4_address = (known after apply)
          + ipv6_address = (known after apply)
          + name         = "project-v1-server-1"
        }
      + "2" = {
          + internal_ip  = "10.0.0.5"
          + ipv4_address = (known after apply)
          + ipv6_address = (known after apply)
          + name         = "project-v1-server-2"
        }
    }

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes
```

After applying you'll see something like this:

```text
Apply complete! Resources: 20 added, 0 changed, 0 destroyed.

Outputs:

load_balancer = "167.235.216.87"
server_nodes = {
  "0" = {
    "internal_ip" = "10.0.0.3"
    "ipv4_address" = "157.90.254.208"
    "ipv6_address" = "2a01:4f8:c012:5c52::1"
    "name" = "project-v1-server-0"
  }
  "1" = {
    "internal_ip" = "10.0.0.4"
    "ipv4_address" = "49.12.185.237"
    "ipv6_address" = "2a01:4f8:c17:882f::1"
    "name" = "project-v1-server-1"
  }
  "2" = {
    "internal_ip" = "10.0.0.5"
    "ipv4_address" = "91.107.211.222"
    "ipv6_address" = "2a01:4f8:c012:4c27::1"
    "name" = "project-v1-server-2"
  }
}
```

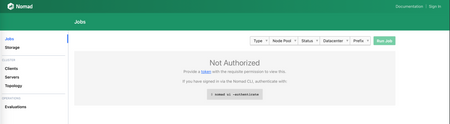

Take a note for the IP address of the load balancer. If everything works well, you should see the Nomad console after navigating:

If you're wondering where to get a token to log in, you're in the same boat I was. On a fresh setup it's easy: bootstrap the ACL system once through the load balancer and extract the SecretID of the returned management token:

```text
❯ curl --request POST http://167.235.216.87/v1/acl/bootstrap | jq -r -R 'fromjson? | .SecretID?'
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100   366  100   366    0     0   7945      0 --:--:-- --:--:-- --:--:--  9891
<HERE_IS_THE_TOKEN>
```
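The same SecretID also works for the nomad command-line tool, which reads the cluster address from NOMAD_ADDR and the ACL token from NOMAD_TOKEN. A small sketch, assuming the nomad CLI is installed locally; nomad_login is a hypothetical helper:

```shell
#!/usr/bin/env bash

# Hypothetical helper: point the local nomad CLI at the cluster through the
# load balancer, which listens on port 80 and forwards to Nomad's 4646.
nomad_login() {
  local lb_ip="$1" token="$2"
  if [ -z "$lb_ip" ] || [ -z "$token" ]; then
    echo "usage: nomad_login <load-balancer-ip> <secret-id>" >&2
    return 1
  fi
  export NOMAD_ADDR="http://${lb_ip}:80"
  export NOMAD_TOKEN="$token"
}

# Example (not run here):
#   nomad_login 167.235.216.87 "<HERE_IS_THE_TOKEN>"
#   nomad server members   # the three servers, one marked as leader
#   nomad node status      # client nodes; empty until one is added
```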

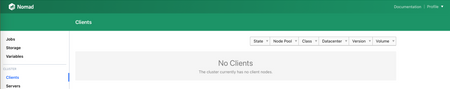

You should now be able to log in with that token. But wait, there are no client nodes!

The next step is to add a client node. Remember the commented-out entry in the nodes variable in main.tf? Uncomment it:

```hcl
3 = {
  private_ip = "10.0.0.6"
  location   = "fsn1"
  type       = "client"
}
```

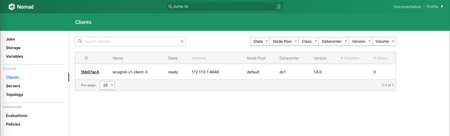

And apply terraform again. It will create a client node. You'll see it in the Nomad console:

So now everything is in place for the experiments to begin. If you don't need the infrastructure anymore, terraform destroy is your friend.

That's all, folks!